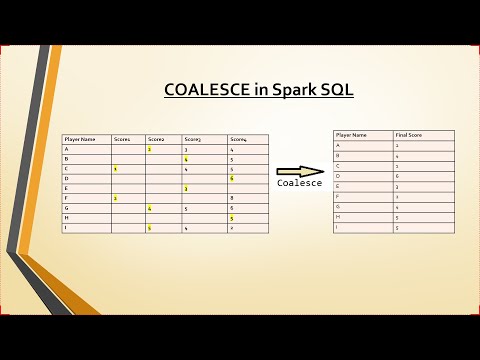

Coalesce in Spark SQL | Scala | Spark Scenario based question

Pyspark Scenarios 23 : How do I select a column name with spaces in PySpark? #pyspark #databricks

Pyspark Scenarios 17 : How to handle duplicate column errors in delta table #pyspark #deltalake #sql

Pyspark Scenarios 18 : How to Handle Bad Data in pyspark dataframe using pyspark schema #pyspark

Pyspark Scenarios 12 : how to get 53 week number years in pyspark extract 53rd week number in spark

Pyspark Scenarios 1: How to create partition by month and year in pyspark #PysparkScenarios #Pyspark

Pyspark Scenarios 9 : How to get Individual column wise null records count #pyspark #databricks

Pyspark Scenarios 11 : how to handle double delimiter or multi delimiters in pyspark #pyspark

Pyspark Scenarios 16: Convert pyspark string to date format issue dd-mm-yy old format #pyspark

Pyspark Scenarios 21 : Dynamically processing complex json file in pyspark #complexjson #databricks

Pyspark Scenarios 13 : how to handle complex json data file in pyspark #pyspark #databricks

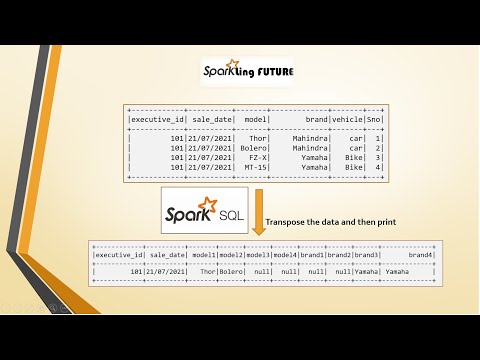

Using Pivot() in Spark SQL with Scala | Scenario based question

When-Otherwise | Case When end | Spark with Scala | Scenario-based questions

Spark Scenario Based Question | Spark SQL Functions - Coalesce | Simplified method | LearntoSpark

Difference between Coalesce and Repartition- Hadoop Interview question

Pivot in Spark DataFrame | Spark Interview Question | Scenario Based | Spark SQL | LearntoSpark

Spark Interview Question | Scenario Based | Data Masking Using Spark Scala | With Demo| LearntoSpark

10 frequently asked questions on spark | Spark FAQ | 10 things to know about Spark

Spark Scenario Based Question | Replace Function | Using PySpark and Spark With Scala | LearntoSpark