Disabling vSphere Flash Read Cache caching in a virtual machine KB2057840

【VMware vSphere】FLASH READ CACHE - SuperMicro 1029U - SETTING UP RAID GROUP RAID1 & RAID10 [1/3]

Allocating vSphere Flash Read Cache to a virtual machine KB20515272

23-Configuring Flash Cache in vSphere 6

Virtual Flash feature in vSphere 5.5 KB 2058983

How to log in to the VMware Flash-based interface when Flash Player goes EOL

Demo of vSphere 5.5's New Flash Read Cache

Changed Block Tracking Restore with VDP Advanced - VMware vSphere Data Protection

VMware vSphere Data Protection 6.0 - Restoring a Virtual Machine

vSAN Operations Guide: Converting from Hybrid to All Flash

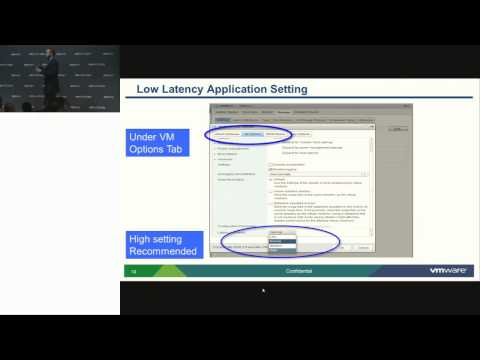

VMworld 2013: Session VSVC4605 - What's New in VMware vSphere?

VM vSphere 5.5 - IT 2 Minute Warning

vSphere 6.x vFlash Pool Management

Understand and Avoid Common Issues with Virtual Machine Encryption

Deploy VMware's vCenter Server Appliance 5.5 in a home lab, without SSO errors

LLM inference optimization: Architecture, KV cache and Flash attention