Extending Context Window of Large Language Models via Positional Interpolation Explained

LLMs | Long Context LLMs: Challenges & Solutions | Lec 20

[#94-1] LLMs with 32K tokens context windows. Llama2, Tokenizers, FastAttention-2, Together (1 of 3)

![[#94-1] LLMs with 32K tokens context windows. Llama2, Tokenizers, FastAttention-2, Together (1 of 3)](https://img.youtube.com/vi/UOJcoIxSqAg/0.jpg)

[#94-3] Creating applications with LLMs and large context windows (32K) via fine-tuning (3 out of 3)

![[#94-3] Creating applications with LLMs and large context windows (32K) via fine-tuning (3 out of 3)](https://img.youtube.com/vi/szwu2e93D4c/0.jpg)

[#94-2] Llama2-7B-32K: "Position Interpolation" Explained (2 out of 3)

![[#94-2] Llama2-7B-32K: 'Position Interpolation' Explained (2 out of 3)](https://img.youtube.com/vi/oa2PevyHicc/0.jpg)

LLaMA 2 New Open Source Large Language Model with 32K Context Window

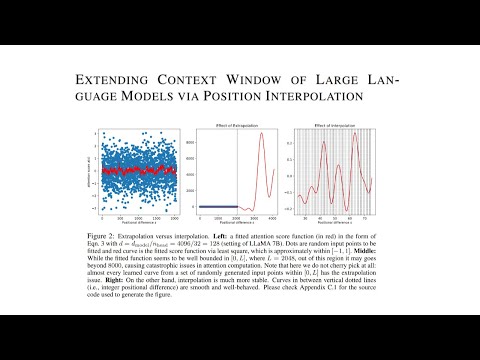

Extending Context Window of Large Language Models via Position Interpolation

Innovation in the LocalLlama Subreddit