Fine Tuning Phi 1_5 with PEFT and QLoRA | Large Language Model with PyTorch

QLoRA—How to Fine-tune an LLM on a Single GPU (w/ Python Code)

LoRA - Low-rank Adaption of AI Large Language Models: LoRA and QLoRA Explained Simply

Fine-tuning Large Language Models (LLMs) | w/ Example Code

Fine-tuning LLMs with PEFT and LoRA

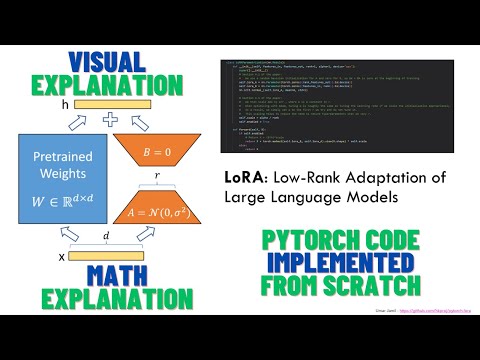

LoRA: Low-Rank Adaptation of Large Language Models - Explained visually + PyTorch code from scratch

Fine-Tune Large LLMs with QLoRA (Free Colab Tutorial)

Step By Step Tutorial To Fine Tune LLAMA 2 With Custom Dataset Using LoRA And QLoRA Techniques

LoRA & QLoRA Fine-tuning Explained In-Depth

Fine Tuning LLM Models – Generative AI Course

LoRA explained (and a bit about precision and quantization)

Fine-tuning Llama 2 on Your Own Dataset | Train an LLM for Your Use Case with QLoRA on a Single GPU

Fine-tuning LLMs with PEFT and LoRA - Gemma model & HuggingFace dataset

Low-rank Adaption of Large Language Models: Explaining the Key Concepts Behind LoRA

"okay, but I want GPT to perform 10x for my specific use case" - Here is how