Jamba First Production Grade MAMBA LLM SSM-Transformer LLM MAMBA + Transformers + MoE

Decoding JAMBA Hybrid Transformer - Mamba Based Model From @ai21labs97 | Architecture Of Jamba Model

Attention!!! JAMBA Instruct - Mamba LLM's new Baby!!!

Jamba V0.1 New Breakthrough For LLM With Mamba And Transformer Architecture

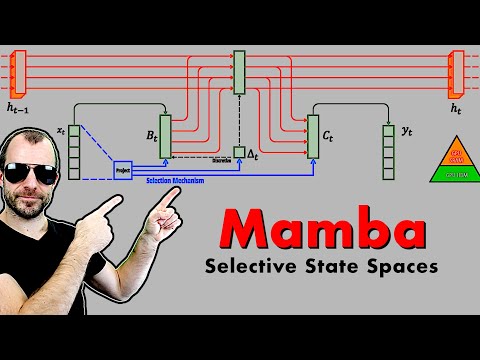

Mamba: Linear-Time Sequence Modeling with Selective State Spaces (Paper Explained)

Mamba complete guide on colab

Mamba Might Just Make LLMs 1000x Cheaper...

JAMBA MoE: Open Source MAMBA w/ Transformer: CODE

The FIRST Production-grade Mamba-based LLM!!!

Jamba 1.5 is out - Hybrid SSM-Transformer Models

Pioneering a Hybrid SSM Transformer Architecture

[QA] Jamba: A Hybrid Transformer-Mamba Language Model

Mamba vs. Transformers: The Future of LLMs? | Paper Overview & Google Colab Code & Mamba Chat

[2024 Best AI Paper] MoE-Mamba: Efficient Selective State Space Models with Mixture of Experts

Jamba MoE 16x12B 🐍: INSANE Single GPU Capability | Is Mamba the FUTURE of AI?

Jamba: A Hybrid Transformer-Mamba Language Model (White Paper Explained)

Mamba Beat Transformer Bring LLMs To Next Level