LLaMA explained: KV-Cache, Rotary Positional Embedding, RMS Norm, Grouped Query Attention, SwiGLU

LLAMA vs Transformers: Exploring the Key Architectural Differences (RMS Norm, GQA, ROPE, KV Cache)

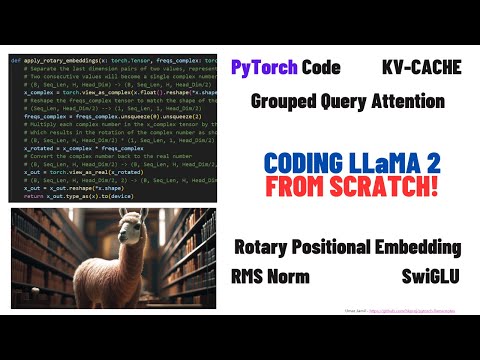

Coding LLaMA 2 from scratch in PyTorch - KV Cache, Grouped Query Attention, Rotary PE, RMSNorm

Transformer Architecture: Fast Attention, Rotary Positional Embeddings, and Multi-Query Attention