LLM Compendium: Tokens, Tokenizers and Tokenization

Let's build the GPT Tokenizer

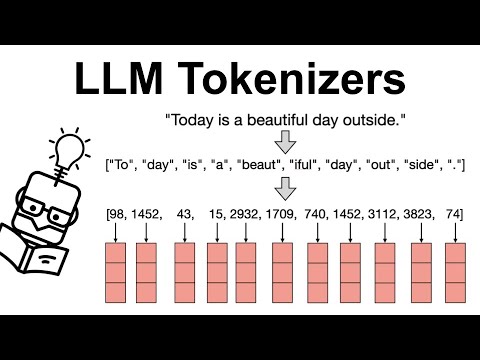

LLM Tokenizers Explained: BPE Encoding, WordPiece and SentencePiece

Parameters vs Tokens: What Makes a Generative AI Model Stronger? 💪

Natural Language Processing - Tokenization (NLP Zero to Hero - Part 1)

How Tokenization Work in LLM - Complete Tutorial

Understanding BERT Embeddings and Tokenization | NLP | HuggingFace| Data Science | Machine Learning

How AI Models Understand Language - Inside the World of Parameters and Tokens

LLM Module 0 - Introduction | 0.5 Tokenization

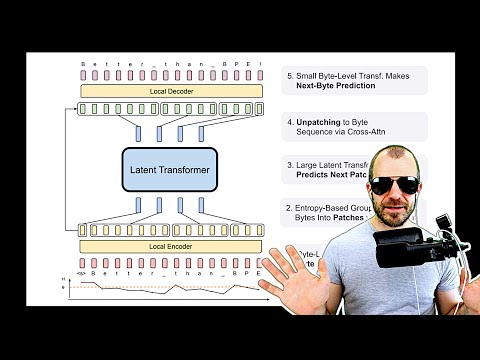

Byte Latent Transformer (BLT) by Meta AI - A Tokenizer-free LLM

What is Token in LLM & GenAI?

How Large Language Models Work

GPT-2 to GPT-4: How Smarter Tokenization Halved Token Usage

Explained: AI Tokens & Optimizing AI Costs

How Do LLMs TOKENIZE Text? | WordPiece, SentencePiece & Subword Explained!

Byte Pair Encoding Tokenization

What Are Tokens in Large Language Models? #llm #ai

Generative AI Simplified - tokens, embeddings, vectors and similarity search

Byte Latent Transformer: Patches Scale Better Than Tokens (Paper Explained)