LLMs | Mixture of Experts(MoE) - I | Lec 10.1

LLMs | Mixture of Experts(MoE) - II | Lec 10.2Подробнее

Mistral 8x7B Part 1- So What is a Mixture of Experts Model?Подробнее

Unraveling LLM Mixture of Experts (MoE)Подробнее

1 Million Tiny Experts in an AI? Fine-Grained MoE ExplainedПодробнее

Stanford CS229 I Machine Learning I Building Large Language Models (LLMs)Подробнее

What are Mixture of Experts (GPT4, Mixtral…)?Подробнее

Soft Mixture of Experts - An Efficient Sparse TransformerПодробнее

Mixture of Experts LLM - MoE explained in simple termsПодробнее

Stanford CS25: V1 I Mixture of Experts (MoE) paradigm and the Switch TransformerПодробнее

Fast Inference of Mixture-of-Experts Language Models with OffloadingПодробнее

How Large Language Models WorkПодробнее

[2024 Best AI Paper] Branch-Train-MiX: Mixing Expert LLMs into a Mixture-of-Experts LLMПодробнее

![[2024 Best AI Paper] Branch-Train-MiX: Mixing Expert LLMs into a Mixture-of-Experts LLM](https://img.youtube.com/vi/3l08nxNBsAk/0.jpg)

Fine-Tuning LLMs Performance & Cost Breakdown with Mixture-of-ExpertsПодробнее

sparsity in deep neural networks, qsparse#neuralnetwork#sparse#derananews#weight#pruning#moe#llm#aiПодробнее

Understanding Mixture of ExpertsПодробнее

Mistral / Mixtral Explained: Sliding Window Attention, Sparse Mixture of Experts, Rolling BufferПодробнее

Mixture-of-Agents (MoA) Enhances Large Language Model CapabilitiesПодробнее

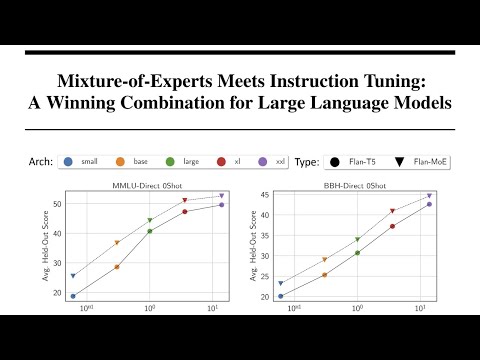

Mixture-of-Experts Meets Instruction Tuning: A Winning Combination for LLMs ExplainedПодробнее