Testing Stable Diffusion Inference Performance with Latest NVIDIA Driver including TensorRT ONNX

Accelerate Stable Diffusion with NVIDIA RTX GPUs

Double Your Stable Diffusion Inference Speed with RTX Acceleration TensorRT: A Comprehensive Guide

stable diffusion mojo vs stable diffusion onnx inference tests

A1111: nVidia TensorRT Extension for Stable Diffusion (Tutorial)

2X SPEED BOOST for SDUI | TensorRT/Stable Diffusion Full Guide | AUTOMATIC1111

How To Increase Inference Performance with TensorFlow-TensorRT

TensorRT for Beginners: A Tutorial on Deep Learning Inference Optimization

How to Run Stable-Diffusion using TensorRT and ComfyUI

70+ FPS EVA02 Large Model Inference with ONNX + TensorRT

Inference Optimization with NVIDIA TensorRT

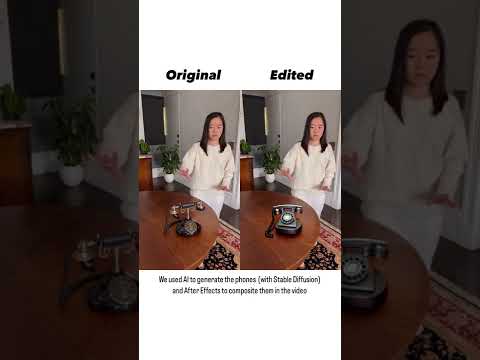

Testing Stable Diffusion inpainting on video footage #shorts

Generate Images Faster with Stable Diffusion and RTX

Getting Started with NVIDIA Torch-TensorRT

How to Deploy HuggingFace’s Stable Diffusion Pipeline with Triton Inference Server