The Best Way to Deploy AI Models (Inference Endpoints)

Edge AI Inference Endpoint Part 1: Deploy and Serve Models to the Edge in Wallaroo

Vertex Ai: Model Garden , Deploy Llama3 8b to Inference Point #machinelearning #datascience

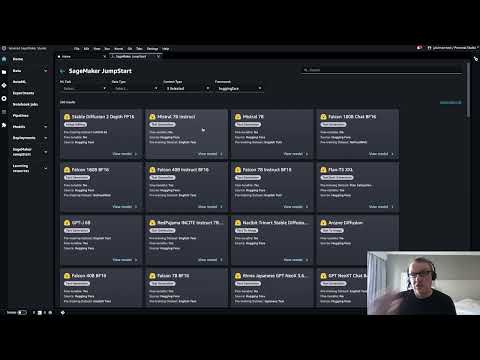

#3-Deployment Of Huggingface OpenSource LLM Models In AWS Sagemakers With Endpoints

Deploy Hugging Face models on Google Cloud: from the hub to Inference Endpoints

Exploring the Latency/Throughput & Cost Space for LLM Inference // Timothée Lacroix // CTO Mistral

Beginner's Guide to DS, ML, and AI - [3] Deploy Inference Endpoint on HuggingFace

![Beginner's Guide to DS, ML, and AI - [3] Deploy Inference Endpoint on HuggingFace](https://img.youtube.com/vi/382yy-mCeCA/0.jpg)

Deploy Hugging Face models on Google Cloud: from the hub to Vertex AI

🤗 Hugging Cast S2E3 - Deploying LLMs on Google Cloud

Deploying Llama3 with Inference Endpoints and AWS Inferentia2

Deploy Hugging Face models on Google Cloud: directly from Vertex AI

SageMaker JumpStart: deploy Hugging Face models in minutes!

Azure ML: deploy Hugging Face models in minutes!

How to deploy LLMs (Large Language Models) as APIs using Hugging Face + AWS

Azure ML Deploy Inference Endpoint

Deploy LLM to Production on Single GPU: REST API for Falcon 7B (with QLoRA) on Inference Endpoints

Leveraging ML Inference for Generative AI on AWS - AWS ML Heroes in 15

Deploy ML model in 10 minutes. Explained

MLOps with the Hugging Face Ecosystem (Merve Noyan)

The EASIEST Way to Deploy AI Models from Hugging Face (No Code)