The inner workings of LLMs explained - VISUALIZE the self-attention mechanism

[Korean subtitles] The inner workings of LLMs explained VISUALIZE the self attention mechanism

![[Korean subtitles] The inner workings of LLMs explained VISUALIZE the self attention mechanism](https://img.youtube.com/vi/a9in6FJyjG4/0.jpg)

The Attention Mechanism for Large Language Models #AI #llm #attention

Attention mechanism: Overview

LLM Foundations (LLM Bootcamp)

How Large Language Models Work

Attention Mechanism In a nutshell

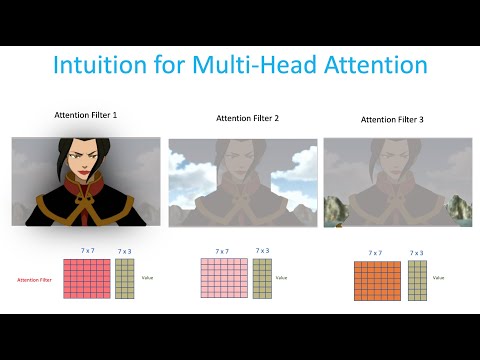

Visualize the Transformers Multi-Head Attention in Action

How ChatGPT Works Technically | ChatGPT Architecture

Attention is all you need (Transformer) - Model explanation (including math), Inference and Training

What is Attention in LLMs? Why are large language models so powerful

Visual Guide to Transformer Neural Networks - (Episode 2) Multi-Head & Self-Attention

[1hr Talk] Intro to Large Language Models

![[1hr Talk] Intro to Large Language Models](https://img.youtube.com/vi/zjkBMFhNj_g/0.jpg)