Why Mixture of Experts? Papers, diagrams, explanations.

Mixture of Nested Experts: Adaptive Processing of Visual Tokens | AI Paper Explained

Understanding Mixture of Experts

Mixture of Experts LLM - MoE explained in simple terms

Soft Mixture of Experts - An Efficient Sparse Transformer

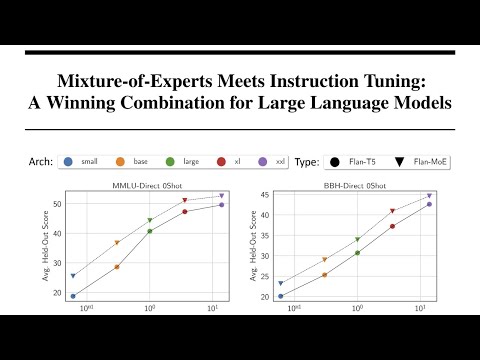

Mixture-of-Experts Meets Instruction Tuning: A Winning Combination for LLMs Explained

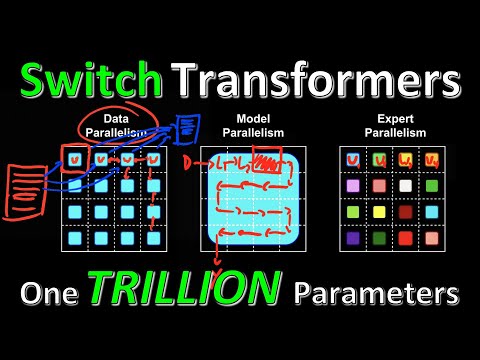

Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity

Research Paper Deep Dive - The Sparsely-Gated Mixture-of-Experts (MoE)