Extending Transformer Context with Rotary Positional Embeddings

How Rotary Position Embedding Supercharges Modern LLMs

RoPE Rotary Position Embedding to 100K context length

Self-Extend LLM: Upgrade your context length

Context extension challenges in Large Language Models

YaRN: Efficient Context Window Extension of Large Language Models

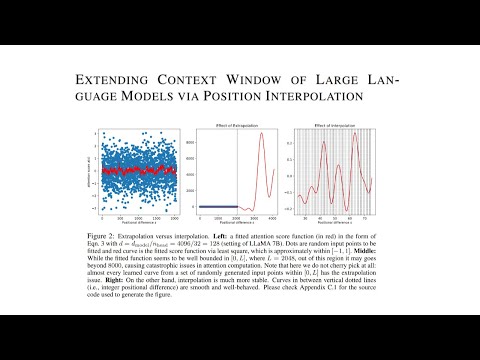

Extending Context Window of Large Language Models via Positional Interpolation Explained

RoPE (Rotary positional embeddings) explained: The positional workhorse of modern LLMs
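Several of the titles above, including the RoPE explainers and positional interpolation, share the same core mechanism. The Python below is a minimal sketch of it. Each pair of query/key dimensions is rotated by an angle proportional to the token position. Linear positional interpolation then appears as a single `scale` factor applied to positions.

The sketch rests on these assumptions:
- It uses the interleaved pairing (x[2i], x[2i+1]) and base 10000 from the original RoFormer formulation. Some implementations instead pair dimension i with dimension i + d/2.
- `rope_angles`, `apply_rope`, and `dot` are hypothetical helpers, not any library's API.
- YaRN and Self-Extend modify this scheme in more involved ways that the sketch does not cover.

```python
import math

# Assumptions (illustrative only): interleaved pairs (x[2i], x[2i+1]),
# base 10000 as in the RoFormer paper, and linear position scaling for PI.


def rope_angles(pos, dim, base=10000.0, scale=1.0):
    """Angle for each 2-D pair at token position `pos`.

    Pair i rotates at frequency base**(-2i/dim); `scale` < 1 compresses
    positions, which is linear positional interpolation.
    """
    return [pos * scale * base ** (-2 * i / dim) for i in range(dim // 2)]


def apply_rope(x, pos, base=10000.0, scale=1.0):
    """Rotate each consecutive pair of `x` by its position-dependent angle."""
    if len(x) % 2 != 0:
        raise ValueError("embedding dimension must be even")
    out = []
    for i, theta in enumerate(rope_angles(pos, len(x), base, scale)):
        a, b = x[2 * i], x[2 * i + 1]
        c, s = math.cos(theta), math.sin(theta)
        # Standard 2-D rotation of the pair (a, b) by theta.
        out.extend([a * c - b * s, a * s + b * c])
    return out


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


if __name__ == "__main__":
    q = [1.0, 0.5, -0.3, 2.0]
    k = [0.2, -1.0, 0.7, 0.4]
    # Same relative offset (3) at different absolute positions.
    print(dot(apply_rope(q, 5), apply_rope(k, 2)))
    print(dot(apply_rope(q, 13), apply_rope(k, 10)))
    # PI: stretch a 2048-token model to 8192 tokens by scaling positions.
    scale = 2048 / 8192
    print(rope_angles(8000, 4, scale=scale) == rope_angles(2000, 4))
```

Each pair is rotated by an angle proportional to its position, so the query-key dot product depends only on the offset between the two positions. As a result, the two printed dot products agree up to rounding.

With `scale = 2048 / 8192`, position 8000 receives the same angles as unscaled position 2000. This is how interpolation keeps extended positions inside the range seen during training. The 2048 and 8192 lengths are illustrative.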